Currently, one of the most pressing questions in science communication is what impact participating in these kinds of activities has on individual scientists. These impacts are difficult to quantify, as many are indirect, ephemeral, and often considerably delayed. Of course, scientists, administrators, and funding agencies also want to quantify how these impacts directly affect the metrics we use to evaluate researchers: grant dollars generated, number of published scientific papers, and the number of citations a paper receives.

Liz Neeley and I discussed this in our 2014 paper.

In terms of social media outreach, or outreach in general, the impact on a scientist’s career remains largely unquantified and quite possibly indirect. “Many faculty members identified their primary job responsibilities as research and post-secondary teaching. They felt that outreach participation hindered their ability to fulfill those responsibilities and might be an ineffective use of their skills and time, and that it was not a valid use of their research funding”. In the survey by Ecklund et al., 31% of scientists felt that research university systems value research productivity, as indexed by grants and published papers, over everything else, including outreach. With this prioritization structure in place outreach may be perceived as unrelated to a scientist’s academic pursuits.

Perhaps because it is easy to quantify and the impact is hypothesized to be direct, one specific question continues to generate considerable attention. If a paper receives a significant number of social media mentions, does it also receive a significant number of citations? If this correlation exists, it would support an argument for Tweeting, Facebooking, etc. about your scientific papers. This science communication would increase the exposure of your paper, including to scientists, eventually leading to more citations of that paper. If this were true, the impact of science communication would be direct and would affect a metric that is used to evaluate scientists.

One of the largest studies on this topic, an analysis by Haustein et al. of 1.4 million documents in PubMed and Web of Science published from 2010 to 2012, found no correlation between a paper's or a journal's citation count and Twitter mentions. However, multiple studies since have found a link between Tweets and citation rates, including the papers of Peoples et al. and de Winter.

A new paper by Finch et al. finds that a link between social media mentions and citations also exists in the ornithology literature. The authors set up the question nicely in the introduction:

Weak positive correlations between social media mentions and future citations [5,8–10] suggest that online activity may anticipate or drive the traditional measure of scholarly ‘impact’. Online activity also promotes engagement with academic research, scholarly or otherwise, increasing article views and PDF downloads of PLoS ONE articles, for example [11,12]. Thus, altmetrics, and the online activity they represent, have the potential to complement, pre-empt and boost future citation rates, and are increasingly used by institutions and funders to measure the attention garnered by the research they support [13].

The findings? For a subset of 878 articles published in 2014, the group found that an increase in social media mentions, as indexed by the Altmetric Score, from 1 to 20 was associated with a 112% increase in citation count, from 2.6 to 5.5 citations per article.
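As a quick sanity check on that percentage, here is the arithmetic as a tiny sketch. The helper name `percent_increase` is mine, not something from the paper; the only inputs are the two citation figures quoted above.

```python
def percent_increase(before, after):
    """Relative change from `before` to `after`, expressed in percent."""
    return (after - before) / before * 100


# Citation counts per article quoted above: 2.6 rising to 5.5.
print(round(percent_increase(2.6, 5.5)))  # 112
```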

So drop what you’re doing and start Tweeting about your most recent paper RIGHT NOW!

But wait…

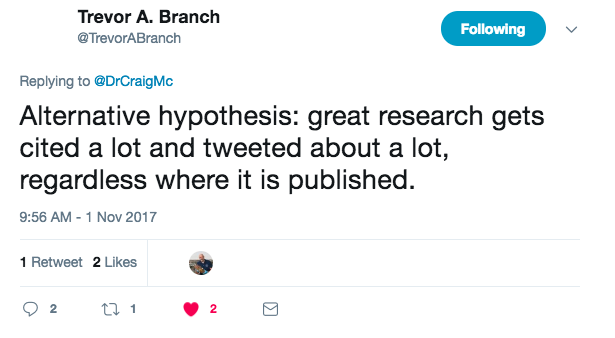

All of these studies show a correlation and not causation. Simply put, scientific papers with a lot of social media mentions also have lots of citations. One hypothesis would be that communicating your science broadly increases its exposure and increases the probability of citation. And it appears that often those advocating for science communication repeat this narrative despite there currently being no support for this hypothesis. Why is there no support?

Because the correlation between social media mentions and citations could be equally explained by other hypotheses.

So an equally likely explanation for this correlation is that papers that are popular garner both numerous social media mentions and eventually numerous citations.
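To make that alternative concrete, here is a minimal simulation sketch. Everything in it is an assumption for illustration, not data from any of the studies above: each hypothetical paper gets a hidden `appeal` score, and its mentions and citations each depend on `appeal` plus independent noise. Mentions never feed into citations in this toy model, yet the two still come out clearly correlated.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def simulate_papers(n_papers=5000, seed=0):
    """Toy model: a hidden 'appeal' drives both outcomes independently."""
    rng = random.Random(seed)
    mentions, citations = [], []
    for _ in range(n_papers):
        appeal = rng.gauss(0, 1)  # hypothetical popularity of the paper
        mentions.append(appeal + rng.gauss(0, 1))   # no link to citations
        citations.append(appeal + rng.gauss(0, 1))  # no link to mentions
    return mentions, citations


mentions, citations = simulate_papers()
r = pearson(mentions, citations)
print(f"correlation with zero causal effect of mentions: {r:.2f}")
```

In this setup the expected correlation is about 0.5, purely because both quantities share a common cause. A correlational study of these simulated papers could not tell that apart from a world where mentions drive citations.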

Tom Webb also makes an outstanding point about the authors of such studies.

The authors of this most recent study note this overall causation and correlation dilemma as well.

Instead, our results suggest that altmetrics might provide an initial and immediate indicator of a research article’s future scholarly impact, particularly for articles published in more specialist journals…The correlative nature of this and other studies makes it difficult to establish any causal relationship between online activity and future citations

So what now? First, stop arguing, as many did on Twitter today (examples here), that Tweeting about your paper is a good thing because it will ultimately generate more citations. The jury is still out on this and will be until a study is specifically designed to test for causation and not just correlation.
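What might such a study look like? One common approach, and this is my assumption rather than a design anyone above has proposed, is a randomized experiment: flip a coin for each paper to decide whether it gets promoted, so that promotion cannot be tied to how appealing the paper already is. The sketch below simulates that with made-up numbers; `true_effect` is a hypothetical parameter for the citation boost that promotion causes.

```python
import random


def randomized_trial(n_papers=20000, true_effect=0.0, seed=1):
    """Return mean citations (promoted) minus mean citations (control).

    Promotion is assigned by coin flip, independent of a paper's hidden
    appeal, so the difference in means estimates the causal effect.
    """
    rng = random.Random(seed)
    promoted, control = [], []
    for _ in range(n_papers):
        appeal = rng.gauss(0, 1)       # hidden popularity, as before
        promote = rng.random() < 0.5   # random assignment
        citations = appeal + rng.gauss(0, 1)
        if promote:
            citations += true_effect   # hypothetical causal boost
            promoted.append(citations)
        else:
            control.append(citations)
    return sum(promoted) / len(promoted) - sum(control) / len(control)


no_effect = randomized_trial(true_effect=0.0)
with_effect = randomized_trial(true_effect=0.5)
print(f"estimated effect when the true effect is 0.0: {no_effect:.2f}")
print(f"estimated effect when the true effect is 0.5: {with_effect:.2f}")
```

Because the coin flip is independent of `appeal`, the estimate lands near whatever `true_effect` was set to, including zero. That is exactly what the correlational studies cannot tell us.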

Hey – thanks for this. I did a short thread here https://twitter.com/tomfinch89/status/925657608228409344 in which I attempt to highlight the correlation/causation issue (how are we doing on point 7?!). I appreciate that you acknowledge the fact that we acknowledge the fact that the result is correlative.

I think though that I’d put a pretty strong prior on there being at least some causal effect. Particularly for more specialist articles in ‘lesser’ journals, Twitter activity (etc.) *must* help make a paper more visible? Having said that, ‘possibly generating future citations’ isn’t near the top of the list of reasons why I use Twitter.

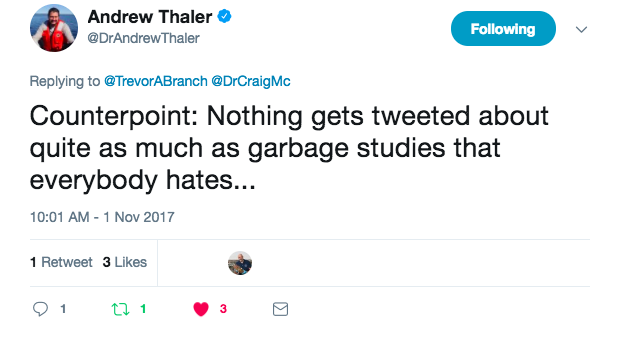

There’s also the question of what causes a paper to get mentioned. Hopefully ours isn’t one of the ‘garbage studies’ highlighted by Andrew Thaler (fwiw, I think these must be pretty rare), but a bunch of the mentions on Twitter have been to do with e.g. nominative determinism and meta-tweeting. I don’t think this really counts as science engagement, but Altmetric still counts it all.

I think correlational research is still worth doing. If someone can stand on our shoulders and work out how to test this experimentally, then great. But whatever their findings, I’ll probably keep using Twitter.

I think it is also worth adding that the science value of all this is also community dependent. We have a mature community in ornithology and some very well used tags (especially #ornithology) which are predominantly science-based content (there are some gatecrashers with pics of birds, trying to flog stuff to fieldworkers) but I’d guess 95% of content is peer-to-peer promotion of their research, rather than wider public engagement. So in this context, those following our community tags are very research focused.

In this context I also agree with Tom that the ‘garbage’ end of the spectrum is pretty rare in our sector and tends to come from those picking up content from outside our community (e.g. the handful of nominative determinism tweets around our paper)

I think it is also worth highlighting the difference we found between journals with lower and higher journal impact factors (JIFs). Papers published in higher-JIF journals do already perform well re. citations against those in lower-JIF journals, but our study found that an increased Altmetric Attention Score for a paper in a lower-JIF journal was associated with a larger increase in citations than for a paper in a higher-JIF journal. Smaller, lower-JIF journals haven’t the clout of the higher-JIF titles, so they need to shout louder and harder to be heard and get seen, and I think this is where social media in particular plays an important role for these titles and their authors.